How many concurrent users can a web server handle?

If you know how many concurrent users your web server can handle, and the average transaction time (or visit duration) from the first page in your transaction flow to the order confirmation page, you can convert this into a Queue Rate using Little's Law, by dividing the number of users by the duration, like this:

Queue rate = Concurrent users / Transaction time
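The formula is Little's Law solved for the arrival rate. Here is a minimal Python sketch of it. The function name is our own illustration, not part of any Queue-Fair API, and the 500-user, 5-minute inputs are simply the figures used in the worked example that follows.

```python
def queue_rate(concurrent_users: float, transaction_time_min: float) -> float:
    """Little's Law (users on site = rate * time on site) solved for the rate.

    concurrent_users: how many people your site can serve at once.
    transaction_time_min: average time from first page to last page, in minutes.
    Returns the Queue Rate in visitors per minute.
    """
    if transaction_time_min <= 0:
        raise ValueError("transaction time must be positive")
    return concurrent_users / transaction_time_min


print(queue_rate(500, 5))  # 100.0
```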

How accurate is a rate-based queue system?

Queue-Fair will deliver visitors to your website at the rate you specify - we have by far the most accurate Queue AI in the business to ensure that the number of visitors you want each minute is the number of visitors you get each minute, automatically accounting for people who are not present when their turn is called, as well as people who come back late.

How does this translate into the number of Concurrent Users? Of course, not every visitor who reaches your site will take the exact average transaction time to complete their transaction, but you will get a very steady number of Concurrent Users with Queue-Fair, because of the Law of Large Numbers.

For example, let's say you have a Queue Rate of 100 visitors per minute. We'll send 100 visitors to your site each and every minute in a steady stream - that's what we do and we're stunningly good at it. Let's also say that people use your website for an average (mean) of five minutes, with 70% of them taking between 4 and 6 minutes from the moment they are passed by the queue to the moment they make their last page request (whether or not they complete a transaction). That's a Standard Deviation of one minute either side of the mean. Statistically speaking, that means for every visitor that takes five and a half minutes, there's going to be another that takes four and a half, and these variations in individual visit durations across multiple sessions therefore tend to cancel each other out when you're counting lots of them in any way. The Law of Large Numbers says that this cancelling out becomes more and more exact the larger the number of people involved.

How exact, exactly? We can work that out with a little statistics. At any moment there are about 5 * 100 = 500 people on your site, and that's the Large Number involved here: the sample size, or how many people you're counting. The statistical formula for the Standard Error in the Mean divides the standard deviation (1 minute) by the square root of the sample size (the square root of 500). That gives a Standard Error in the Mean for the transaction time of 0.045 minutes, or just 2.7 seconds, which is less than one percent of the five minute mean.

This means with a Queue Rate of 100, and a transaction time of 5 minutes give or take a minute for each individual visitor, you should expect between 495 and 505 concurrent users on your site around 70% of the time, so the math says using a rate-based queue will deliver a very steady load on your webservers as desired.
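The arithmetic in the last two paragraphs can be checked with a short Python sketch. The function name is our own. It treats the Standard Error in the Mean as a one-standard-deviation band, which for a normal distribution covers about 68% of cases (the article rounds this to 70%).

```python
import math


def concurrency_band(rate_per_min: float, mean_min: float, sd_min: float):
    """Rough one-SD band for concurrent users via the Standard Error in the Mean.

    The sample size is the number of people on the site at once,
    i.e. rate * mean transaction time (100 * 5 = 500 in the example).
    """
    n = rate_per_min * mean_min
    sem = sd_min / math.sqrt(n)            # standard error in the mean, minutes
    low = rate_per_min * (mean_min - sem)  # fewest users expected ~68% of the time
    high = rate_per_min * (mean_min + sem)
    return n, sem, low, high


rate, mean, sd = 100, 5, 1
n, sem, low, high = concurrency_band(rate, mean, sd)
print(f"SEM = {sem:.3f} min = {sem * 60:.1f} s ({sem / mean:.2%} of the mean)")
print(f"expect about {low:.1f} to {high:.1f} concurrent users")
```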

But is the math accurate? There are some subtleties here - for example, the sample size that we are merrily square-rooting isn't always exactly 500 every time the Concurrent Users are counted (i.e. at any given moment in time), and a normal (Gaussian) distribution can give negative transaction times, which don't occur in real life. So we use a visitor-by-visitor, second-by-second simulator to check these kinds of calculations, and it tells us that with the above figures you should expect between 493 and 507 concurrent users 70% of the time, so the math holds up remarkably well! The simulation also tells us that your site will have 500 ± 15 Concurrent Users at least 95% of the time.

That's probably steadier than the accuracy with which your web server can measure the number of people using your site! Even better, the really neat thing here is that even if you have no idea what the mean transaction time or standard deviation are for your visitors, these quantities exist whether you know them or not, and you'll get a stable load anyway.
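Queue-Fair's own simulator isn't shown here, so the following is only a much simpler stand-in, written under our own assumptions. It models a perfectly steady stream of 100 arrivals per minute and normally distributed visit durations (mean 5 minutes, SD 1 minute) that are redrawn if negative. It counts users on site once per second. We'd expect its bands to come out in the same neighbourhood as the figures quoted above, but treat its output as illustrative.

```python
import bisect
import random
import statistics


def simulate_concurrency(rate_per_min: int = 100, mean_min: float = 5.0,
                         sd_min: float = 1.0, run_min: int = 600,
                         warmup_min: int = 10, seed: int = 1) -> list[int]:
    """Visitor-by-visitor, second-by-second sketch of a rate-based queue.

    Assumes a perfectly steady stream of arrivals and normally distributed
    visit durations, redrawn whenever a draw is zero or negative.
    Returns the number of users on site, sampled once per second.
    """
    rng = random.Random(seed)                  # fixed seed: repeatable runs
    gap_s = 60.0 / rate_per_min                # e.g. one arrival every 0.6 s
    arrivals = [i * gap_s for i in range(run_min * rate_per_min)]  # sorted
    departures = []
    for arrived_at in arrivals:
        duration = rng.gauss(mean_min * 60, sd_min * 60)
        while duration <= 0:                   # real visits can't be negative
            duration = rng.gauss(mean_min * 60, sd_min * 60)
        departures.append(arrived_at + duration)
    departures.sort()

    counts = []
    # Skip the warm-up at the start and stop before arrivals end.
    for t in range(warmup_min * 60, (run_min - warmup_min) * 60):
        arrived = bisect.bisect_right(arrivals, t)    # arrivals at or before t
        left = bisect.bisect_right(departures, t)     # departures at or before t
        counts.append(arrived - left)
    return counts


counts = sorted(simulate_concurrency())
n = len(counts)
print("mean concurrent users:", round(statistics.mean(counts), 1))
print("middle 70%:", counts[int(0.15 * n)], "to", counts[int(0.85 * n)])
print("middle 95%:", counts[int(0.025 * n)], "to", counts[int(0.975 * n)])
```

Using sorted arrival and departure lists with `bisect` keeps each per-second count cheap, so a ten-hour run finishes quickly.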

The upshot is that Queue-Fair will deliver the number of visitors per minute that you want with pretty much perfect accuracy, resulting in a very steady number of concurrent users on your site, and a stable web server load over which you have total control.

Hooray!

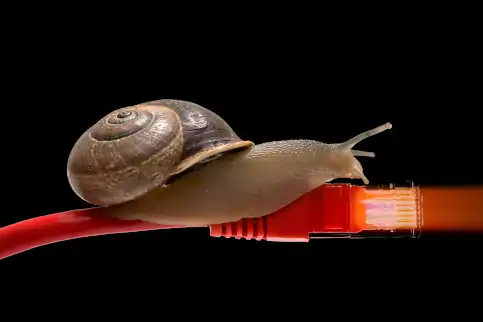

![Server capacity can be exceeded with inaccurate queues]()

And now a warning. It is worth noting that the stability of the number of concurrent users on your site - and therefore the stability of your server load - does depend critically on how accurately your Virtual Waiting Room provider sends you the number of visitors that you want each minute, and this is therefore a key factor when you choose a Virtual Waiting Room platform. Because we provide the most accurate Virtual Waiting Room in the world, nobody stops your servers flooding better than Queue-Fair.

An Easy Way To Calculate the Queue Rate

What if you don't know how many Concurrent Users your server can handle, or your Transaction Time? You can look at the page that is likely to be your bottleneck - usually the one that is the result of clicking a "Buy Now" button. Use Google Analytics to find the monthly unique visitors to that page, or count your monthly orders. Divide this by 30 * 24 * 60 = 43,200, which is the number of minutes in a month (approximately). That's your average visitors per minute over the whole month. Multiply this by three. That's your average visitors per minute during business hours (approximately). Double this, unless the figure is more than 50 (see the example below). That's probably a safe figure for the Queue Rate to use.

For example, let's say you process 100,000 orders per month - that's 100,000 clicks of the "Buy Now" button. That's 100,000 / 43,200 = 2.31 orders per minute. You would expect most of these orders to be during the day, and your servers to be quieter at night, so multiply this by 3 and that's about 7 orders per minute as a rough estimate of how busy your server is during business hours. If the resulting figure is less than 50, there will be proportionally large peaks and troughs in demand, so if your server is not noticeably slow in peak hours, multiply this by 2 to get 14 visitors per minute. If the figure is more than 50, minute-to-minute peaks and troughs will be smaller in comparison, and it's not safe to double it. The number you end up with is probably a safe figure for the Queue Rate to start with, and corresponds to how many new visitors per minute you can safely handle; you can always increase it if you find your systems are still responsive at that rate.
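Here is the same rule of thumb as a small Python function. The name `easy_queue_rate` and the `server_slow_at_peak` flag are our own illustrative choices. The 43,200-minute month, the factor of 3 for business hours, the 50-per-minute threshold and the doubling all come from the text above.

```python
MINUTES_PER_MONTH = 30 * 24 * 60  # 43,200: an approximate month


def easy_queue_rate(monthly_orders: float, server_slow_at_peak: bool = False) -> float:
    """Rule-of-thumb starting Queue Rate (visitors per minute) from monthly orders."""
    per_minute = monthly_orders / MINUTES_PER_MONTH  # average over the whole month
    business_hours = per_minute * 3                  # most orders come in during the day
    if business_hours < 50 and not server_slow_at_peak:
        return business_hours * 2                    # small figures: leave room for peaks
    return business_hours                            # larger figures or a slow server: don't double


print(round(easy_queue_rate(100_000)))  # 14
```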

If your orders are timestamped, you can also look at the maximum orders you took in a single minute in the last month - but use with caution as you won't know how many orders you may have dropped during this minute due to your servers slowing, so reduce this by 20%.
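If you can export your order timestamps, a sketch like the following finds the busiest minute and applies the 20% reduction. It assumes the timestamps are already Python `datetime` objects. How you export them from your order system is up to you, and the function name and sample data below are made up for illustration.

```python
from collections import Counter
from datetime import datetime


def peak_minute_rate(order_times: list[datetime], reduction: float = 0.20) -> float:
    """Most orders seen in any single clock minute, reduced by 20% to allow for
    orders that may have been lost while the servers were slowing down."""
    per_minute = Counter(t.replace(second=0, microsecond=0) for t in order_times)
    if not per_minute:
        return 0.0
    return max(per_minute.values()) * (1 - reduction)


# Made-up example: 30 orders in the 12:00 minute, then 20 in the 12:01 minute.
orders = [datetime(2024, 5, 1, 12, 0, s) for s in range(0, 60, 2)]
orders += [datetime(2024, 5, 1, 12, 1, s) for s in range(0, 60, 3)]
print(peak_minute_rate(orders))
```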

The rest of this article discusses some other ways to work out the Queue Rate.

Gotcha #1: Concurrent Users vs Concurrent Requests vs Concurrent Connections vs Concurrent Sessions

It's worth pointing out that there are at least two definitions of "Concurrent Users" in common usage.

We use the definition, ‘the number of people engaged in a transaction flow at any one time’. That's the key number you need to know to set the Queue Rate: it's how many users are using your site right now. The number of Concurrent Sessions is usually somewhat larger than the number of Concurrent Users, because some sessions belong to visitors who have already left and are still in the process of timing out, which increases the average session duration.

Contrast this with Concurrent Requests, which is the number of HTTP requests being processed by your web server at any one time. Very confusingly, a lot of tech people mean Concurrent Requests when they say Concurrent Users.

Then there's Concurrent Connections (concurrent TCP connections to the same server port), which is the number of TCP/IP sockets open on your server port or backend service at any one time. When making page requests, browsers will by default leave the connection open in case any further requests are made by the page, or the user goes to a different page. This reduces the number of new TCP/IP connections that have to be opened, making the server more efficient. Timeouts for these concurrent connections vary by browser, from 60 seconds to never-close. Your server may automatically close connections after a period of no activity too. On Linux webservers you can get a count of Concurrent Connections to your web server ports with this command:

netstat -ant | awk '$6 == "ESTABLISHED" && $4 ~ /:(80|443)$/' | wc -l

which you can try if you're curious. Again, some people call this "Concurrent Users" too, when it really means concurrent connections.

Indeed, if you ask your hosting provider for the maximum number of Concurrent Users that your web server can handle (how much peak traffic it can take), they will probably give you a figure for Concurrent Sessions, Concurrent Requests or Concurrent Connections. That's for the simple reason that they don't know your average transaction time, the number of pages in your transaction flow, or any of the other information that would allow them to tell you how many simultaneous users your web server can handle. All of these numbers have different values.

If you are asking your hosting provider or tech team for information about maximum traffic levels, it's super-important that you clarify whether they mean Concurrent Users, Concurrent Sessions, Concurrent Requests or Concurrent Connections.

Getting this wrong can crash your web site!

Here's why. Each page is a single HTTP request, but every image, script and other file that the browser fetches from your web application to display the page is also an HTTP request.

Let's imagine you've been told by your tech team that the server supports 500 Concurrent Users, but they actually mean 500 Concurrent Requests. With your 5 minute transaction time, you use the above formula and assume that your site can support 100 visitors per minute.

Can it? No.

As people go through the transaction flow, they are only actually making requests to your servers while each page loads. Out of the five minute transaction time, that's only a few seconds for an average user. You might therefore think that 500 Concurrent Requests means you can handle a lot more than 500 Concurrent Users, but you may well be wrong. Can you see now why working out your website capacity in terms of traffic or active users is such a complicated business?

Converting Concurrent Requests to Concurrent Users

To work out your maximum Concurrent Users from your maximum number of Concurrent Requests, you also need to know:

1) The number of pages in your transaction flow

2) The average visitor transaction time from first page to last page in your flow

3) How many HTTP requests make up each page, on average

4) The average time your server takes to process a single HTTP request

You probably know 1) and 2) already - in our example it's 6 pages and 5 minutes. You can easily count the pages you see while making a transaction. If you don't know the average transaction time, Google Analytics may tell you, or you can check your web server logs.

For 3) and 4), the Firefox browser can help. Right click on a page on your site, choose Inspect Element, and then select the Network tab. Then hit CTRL-SHIFT-R to completely refresh the page. You'll see network load times for every element of the page in the list. Make sure that you can see transfer sizes in the Transferred column, as otherwise files might be served from a cache, which can mess up your calculations. To see the Duration column, right click any column header and select Timings -> Duration from the pop-up menu. Your screen should look like this:


The Firefox Network tab for this page, showing Duration and number of Requests from queue-fair.com

Files used in the display of your pages can come from a number of different sites, so you may also want to type your site's domain name into the filter box in the top left to show only the files from your site - but only if you are sure that the files from other sites are not the reason for slow page loads, or part of your bottleneck.

Firefox counts the requests for you in the bottom left of the display, and shows 36 HTTP requests for just this one page.

You need to do this for every page in your transaction flow - count the total and divide by the number of pages to find the average number of HTTP requests for each page, number 3) in our list. You can see now why the number of child requests for each HTML page is such a key factor for how much traffic your web server can handle.

For number 4), you need to look at the Duration column and find the average for all the HTTP requests for all your pages. If you're not sure, assume half a second - there's a lot of uncertainty in this anyway (see below).
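Counting rows and averaging the Duration column by hand gets tedious over a whole flow. Firefox can also export what the Network tab shows as a HAR file (right-click the request list and choose Save All As HAR). The sketch below assumes one saved HAR file per page in your flow. It uses the standard HAR 1.2 fields `log.entries[].request.url` and `log.entries[].time` (total time in milliseconds) to work out items 3) and 4). The function names, host name and file names are placeholders. As with the manual method, save each HAR after a CTRL-SHIFT-R refresh so that cached files don't skew the figures.

```python
import json
from statistics import mean
from urllib.parse import urlparse


def own_request_times(har: dict, site_host: str) -> list[float]:
    """Durations (ms) of the requests in one HAR capture that went to site_host."""
    return [
        entry["time"]
        for entry in har["log"]["entries"]
        if urlparse(entry["request"]["url"]).hostname == site_host
    ]


def flow_averages(pages: list[dict], site_host: str) -> tuple[float, float]:
    """Return (average requests per page, average seconds per request),
    averaged over every request on every page in the flow."""
    times_ms = [t for har in pages for t in own_request_times(har, site_host)]
    if not times_ms:
        return 0.0, 0.0
    return len(times_ms) / len(pages), mean(times_ms) / 1000


def load_har(path: str) -> dict:
    with open(path, encoding="utf-8") as f:
        return json.load(f)


# Hypothetical usage, with one HAR file saved per page in the flow:
# pages = [load_har(p) for p in ["step1.har", "step2.har", "step3.har"]]
# print(flow_averages(pages, "www.example.com"))
```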

Doing the math

Let's give some example numbers. We've already said there are six dynamic pages in the example server process flow, which is 1), and that the average transaction time is five minutes, which is 2). Let's assume 36 HTTP requests per page for 3), and half a second for the server processing time for each HTTP request, which is 4).

With those numbers, a server that can handle 500 Concurrent Requests can handle 500 / (0.5 seconds) = 1000 HTTP requests per second, which is 60,000 HTTP requests per minute, when it's completely maxed out.

Over the five minute transaction time, it can handle 5 * 60,000 = 300,000 HTTP requests. Seems like a lot, right?

But, for each visitor, there are six pages with an average of 36 HTTP requests each, so that's 6 * 36 = 216 requests.

So, the 300,000 HTTP request capacity can in theory handle 300,000 / 216 = 1,389 Concurrent Users.
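The whole conversion fits in a few lines of Python. This sketch reproduces the arithmetic above with the example figures plugged in, and the function and parameter names are our own. The result is a theoretical ceiling based on measurements from a quiet server, before any allowance for slowdown under load.

```python
def concurrent_users_from_requests(max_concurrent_requests: float,
                                   secs_per_request: float,
                                   pages_in_flow: int,
                                   requests_per_page: float,
                                   transaction_min: float) -> float:
    """Theoretical Concurrent Users from a Concurrent Requests figure."""
    # Little's Law again: throughput = requests in progress / time per request.
    requests_per_min = max_concurrent_requests / secs_per_request * 60
    # Total requests the server could serve during one transaction time.
    capacity = requests_per_min * transaction_min
    # Requests each visitor makes over the whole flow.
    per_visitor = pages_in_flow * requests_per_page
    return capacity / per_visitor


users = concurrent_users_from_requests(500, 0.5, 6, 36, 5)
print(round(users), "concurrent users ->", round(users / 5), "visitors per minute")
# 1389 concurrent users -> 278 visitors per minute
```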

Gotcha #2: Web Servers Get Slower With Load

Hey, that's great! We thought we could only have a queue rate of 100, but 1,389 / 5 minutes = 278 visitors per minute, so we can have a higher queue rate!

Well, probably not. For one, your visitors won't neatly send requests at intervals of exactly half a second, as the above calculation assumes. More importantly, you'll have been measuring your input data when the site isn't busy. Garbage in, garbage out.

When the site is busy, the server takes longer to process requests - you'll have noticed on other sites that you wait longer for pages when things are busy. This increases the average time your server takes to process a single HTTP request (4), which decreases the maximum throughput. So take the 278 visitors per minute and halve it. Then halve it again. You're probably realistically looking at about 70 new visitors per minute at maximum load.

Other confounding factors include caching, which means your visitors' browsers may not need to make every single request for every single page - this tends to mean the server needs fewer resources, and can increase the number of new visitors per minute your server can handle. Load balancers that distribute load across several servers, and serving static content rather than dynamic pages, can also increase capacity, as each request then needs fewer resources from any one server.

You'll also find that not all the pages take the same time to complete, as some require more resources than others to produce and serve. Database queries, searches and updates take the longest, so you will have a bottleneck somewhere in your process where people pile up, waiting for credit card details to be processed and orders stored, or waiting for availability to be checked. Every transaction flow has a slowest step, so there is always a bottleneck somewhere, and therefore always a maximum answer to the question "how many concurrent users can a web server handle?" - and there may be several limits involved. You want to set your Queue Rate low enough that your server has the CPU capacity to process enough people concurrently at the slowest step in your process, so that people don't pile up there. Otherwise your webserver can literally grind to a halt.

So what do I do?

Our experience is that, going into their first sale, everybody overestimates the ability of their servers to cope with high volumes of traffic.

Everybody.

Accurately pinpointing the average session duration and end user performance during quiet or peak periods isn't for the faint-hearted. The best thing to do is run a proper load test. Have 'fake' customers go through the order process exactly as they would in real life, making the same HTTP requests in the same order, with the same waits between pages as you see in real life. Keep an eye on your processor load, IO throughput and response times as you ramp up the number of virtual visitors. You can use Apache JMeter for this (we also like k6 for lighter loads or slower machines), but whatever tool you use, it's time-consuming and tricky to mimic the behaviour of every single user in exactly the right way (especially with the complexities of caching). Even then, take your max number and halve it.

In the absence of that, err on the side of caution.

You can easily change the Queue Rate for any Queue-Fair queue at any time using the Queue-Fair Portal. Start at 10 visitors per minute, or your transaction rate on a more normal day, and see how that goes for a little while after your tickets go on sale. If all looks good, your processor load is low, your SQL database is fine and (above all) your pages are responsive when you hit CTRL-SHIFT-R, raise it by no more than 20 percent, wait a bit, and repeat. You'll soon find the actual rate you need during this 'load balancing' (see what we did there?). Remember, from a customer experience point of view, it's fine to raise the Queue Rate, as this reduces the estimated waits that your customers in the queue are seeing, and everyone is happy to see a shorter estimated wait.

What you want to avoid is setting the Queue Rate too high and then having to lower it, as this a) means people using the site experience slow page loads, and b) causes the estimated waits to increase. All the people in your queue will sigh!

Gotcha #3: Increasing the rate too quickly after a queue opens

Remember, you will have a bottleneck somewhere in your order process - every transaction has a slowest step - and you'll get multiple sessions piling up there. What you don't want to do is get a minute into your ticket sale, see that your server processor load is well below its maximum, and raise the rate. Your visitors probably haven't got as far as the "Buy Now" button yet. You want to wait until your SQL database is recording new orders at the same or similar rate as your Queue Rate, and make your measurements and responsiveness tests then. Remember that every time you increase the rate, it will take that same amount of time again for the extra visitors to reach your bottleneck, so you won't be able to accurately assess how your server performs at the new rate until after that time has elapsed.

Gotcha #4: Snapping your servers

We've already discussed how it's best to increase the Queue Rate gradually once your queue has opened. You are probably aware that your servers have a limit that cannot be exceeded without the system crashing, and may even know what that limit is - but what you may not know is that as the load approaches this limit, there is usually very little sign of it - often just a few errors or warnings, or a processor load above 80%.

When web services fail they tend to 'snap' or seize up very quickly. This is normally because once your system can no longer process requests as quickly as they come in, internal queues of processing build up. Your system then has to do the work of processing, managing and storing its internal queues as well as the requests, and that's what tips servers over the edge. Very quickly. Once that happens, your servers may for a time be able to respond with an error page, but this doesn't help you because the visitors that see it will immediately hit Refresh, compounding the load.

Even before then, if it's taking more than about a second for the visitors to see your pages, they hit Refresh. When a visitor hits refresh, your webserver doesn't know that visitor is no longer waiting for the response to the original request. If both the original and the refresh request are received, your webserver will process them both. That means more work for your webserver, even slower responses for all your visitors, and more people hitting refresh, resulting in a vicious cycle that snaps your webserver before it even starts to send error responses.

So, don't push your servers any harder than you need to. Going for that last 20% of CPU capacity is usually not worth the risk. Check the queue size shown in the Queue-Fair Portal (the yellow Waiting figure and line in the charts). If it is decreasing, or even just increasing more slowly minute by minute, and the wait time shown is 50 minutes or less, then you are processing orders fast enough. The queue will eventually empty and stop showing Queue Pages automatically, without you having to do anything, and without you having to tell your boss that you pushed it too hard and broke it. You'll get there eventually so long as the speed of the Front of the Queue is higher than the number of Joins every minute (both of which are shown in the Queue-Fair Portal) - the turning point is usually at least a few minutes into each event. If you are selling a limited-quantity product, you will probably sell out before the turning point is reached.
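If you note down the Waiting figure from the Portal each minute, the checks in this paragraph can be sketched as follows. The function names are hypothetical. The "increasing more slowly" test is deliberately simplistic, because real minute-by-minute figures are noisy. `minutes_to_empty` assumes the Front of the Queue speed and the Joins stay constant, which they won't in practice.

```python
import math


def fast_enough(waiting_by_minute: list[int], shown_wait_min: float) -> bool:
    """True when the queue is shrinking, or growing more slowly every minute,
    and the wait shown to new arrivals is 50 minutes or less."""
    if len(waiting_by_minute) < 3:
        return False  # not enough history to judge a trend yet
    growth = [b - a for a, b in zip(waiting_by_minute, waiting_by_minute[1:])]
    shrinking = growth[-1] < 0
    slowing = all(later < earlier for earlier, later in zip(growth, growth[1:]))
    return (shrinking or slowing) and shown_wait_min <= 50


def minutes_to_empty(waiting: int, front_per_min: float, joins_per_min: float) -> float:
    """Rough time to clear the queue if both per-minute rates stayed constant."""
    net = front_per_min - joins_per_min
    return waiting / net if net > 0 else math.inf
```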

The good news is that if you accidentally do set the Queue Rate too high and your servers snap, Queue-Fair can help you get up and running quickly - just put the queue on Hold until your servers are ready to handle visitors again. In Hold mode, people in the queue see a special Hold page that you can design before your online event. No-one is let through from the front of the queue when it is on Hold, but new internet visitors can still join the queue at the back, to be queued fairly once the blockage is cleared, which will happen very quickly because Queue-Fair is protecting your site from the demand. The Hold Page is a superior user experience to setting the Queue Rate really low, especially if you update it to tell the visitors what time you expect the Queue to reopen, which is easy to do with the Portal page editor, even when hundreds of thousands of people are already in the queue - and in Hold mode you can even let them through one at a time with Queue-Fair's unique Admit One button if you need to while your system recovers from its snap.

So, if you do find your servers need to take a break during your event, the Hold page is just what you need for that, and will help your servers recover more quickly to boot.

Conclusion

In this article we've explained why a rate-based queue is always the way forward, and given two methods to calculate the rate you need, but unless you've done full and accurate virtual visitor load testing on your entire transaction flow, and are really super extra mega certain about that, our advice is always the same:

1) Start with a Queue Rate set to 10, or your transaction rate on a more normal day.

2) Watch your processor load and other performance indicators.

3) Wait until new orders are being recorded in your SQL database at the same or similar rate as your Queue Rate.

4) Hit CTRL-SHIFT-R on your pages to check responsiveness. If it takes more than a second to get the whole page, you're maxed out and it's not safe to increase the Queue Rate.

5) If safe, increase the Queue Rate by no more than 20%.

6) Go back to Step 2, and wait again.

7) Once the queue size is decreasing, or is increasing less rapidly every minute, and the wait time shown to new arrivals is less than 50 minutes, the Queue Rate doesn't need to go any faster.

8) Sit back and relax! Queue-Fair's got you covered.

If you are selling a limited-quantity product, you don't need to pay attention to Step 7, as you will probably sell out before the queue reaches that point.
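For anyone scripting their monitoring, Steps 2 to 7 of the checklist boil down to a simple decision each time you check in. This is only a sketch. The 80% CPU ceiling (from "that last 20%" earlier), the 90% tolerance for "same or similar rate" and all the parameter names are our own assumptions. You would still apply the result by hand in the Queue-Fair Portal.

```python
def next_queue_rate(rate: float, orders_per_min: float, cpu_load: float,
                    full_page_load_s: float, queue_keeping_up: bool) -> float:
    """Suggest the next Queue Rate following the checklist (a sketch).

    cpu_load is a fraction (0.0 to 1.0); full_page_load_s is the time for a
    CTRL-SHIFT-R refresh of the whole page; queue_keeping_up is your Step 7 call.
    The 0.9 and 0.8 thresholds are assumptions, not Queue-Fair settings.
    """
    if queue_keeping_up:
        return rate          # Step 7: no need to go any faster
    if orders_per_min < 0.9 * rate:
        return rate          # Step 3: visitors haven't reached the bottleneck yet
    if full_page_load_s > 1.0 or cpu_load > 0.8:
        return rate          # Steps 2 and 4: maxed out, not safe to increase
    return rate * 1.2        # Step 5: raise by no more than 20%


# Example ramp from the suggested starting rate of 10, if every check passes:
rate = 10.0
for _ in range(5):
    rate = next_queue_rate(rate, orders_per_min=rate, cpu_load=0.5,
                           full_page_load_s=0.6, queue_keeping_up=False)
    print(round(rate, 1))
```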

That's for your first queue, when you don't know the actual maximum Queue Rate your system can support. For subsequent queues, once you've measured the Queue Rate that your system can actually handle, you might be able to use the same figure again - but only if nothing has changed on your system. In practice your system is probably under constant development and modification, and you may not know how recent changes have affected your maximum Queue Rate - so why not start at half your previous measured figure and repeat the above process?

So that's how to use Queue-Fair to make your onsale all nice and safe, and remember, it's always better to be safe than sorry.
